Functional Modeling & Cause and Effect Chains

A Powerful Toolkit for Root Cause Failure Analysis

The Twin Boeing 737 Max 8 Crashes

In my previous article, we took a quick look at the Boeing 737 Max 8 aircraft crashes of October 29th, 2018 (killing all 189 on board) and March 10th, 2019 (killing all 157 on board). Indonesian and Ethiopian authorities had tied these disasters to a faulty angle-of-attack (AOA) sensor. When I wrote that initial article, it was my opinion that the crashes would, in the long run, turn out to be the result of much more than the failure of a single sensor. Further analyses by multiple experts since then have proved that opinion correct. In this article, we will discuss the methods that a root cause failure analysis expert would use to analyze such a crash, or to perform failure anticipation analysis with the aim of avoiding crashes altogether.

A quick review of the previous article will help us better understand the analysis to follow. In summary, the initial investigations had shown that faulty AOA sensors told the automated flight control systems (AFCS) that the aircraft were climbing too steeply and were thus in danger of stalling. In response, the AFCS pitched the aircraft noses downward in a maneuver that the pilots could not counteract, driving the 737 Max 8s into the ground.

Root Cause Failure Analysis (RCFA)

I am not an aviation expert, but systems engineering and systematic innovation, my fields of expertise, offer a plethora of tools for understanding systems, their potential failure modes, and the root causes associated with those modes. In my experience, the most effective means for truly understanding engineering systems is, by far, the coordinated use of Functional Modeling (FM) and Cause and Effect Chains (CEC). Keep in mind that while you do not need to be an expert on the system to which you are applying FM and CEC analyses, you do need the right level of access to those who are experts on the system under study to achieve an effective outcome. The examples below have been (perhaps overly) simplified, but they should still convey the power and effectiveness of this toolkit.

Functional Modeling (FM)

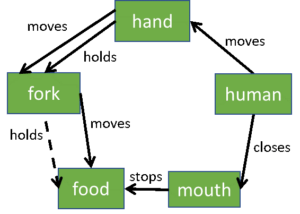

FM is a way of showing the functional relationships between the various physical components that make up a system. For example, functionally modeling the steps required to place food into your mouth with a fork might appear as follows:

1. Hand holds fork

2. Human moves hand

3. Hand moves fork

4. Fork holds food

5. Fork moves food (into mouth)

6. Human closes mouth

7. Hand moves fork (out of closed mouth)

8. Closed mouth stops food (sweeps food off of fork within mouth)
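To make the structure of such a model concrete, the fork steps above can be written as subject-action-object triples. The sketch below is a minimal illustration under our own assumptions: the `Function` record and the helper names `components` and `acted_on_by` are hypothetical, not part of any FM tool, and "mouth" and "closed mouth" are kept as separate entries simply because the steps name them that way.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Function:
    """One link in a functional model: a subject component acts on an object."""
    subject: str
    action: str
    obj: str


# Hypothetical encoding of the eight fork steps, one Function per step.
FORK_MODEL = [
    Function("hand", "holds", "fork"),
    Function("human", "moves", "hand"),
    Function("hand", "moves", "fork"),
    Function("fork", "holds", "food"),
    Function("fork", "moves", "food"),
    Function("human", "closes", "mouth"),
    Function("hand", "moves", "fork"),
    Function("closed mouth", "stops", "food"),
]


def components(model):
    """Return every component that appears as a subject or an object, sorted."""
    return sorted({f.subject for f in model} | {f.obj for f in model})


def acted_on_by(model, obj):
    """Return the distinct (subject, action) pairs that act on the given object."""
    return sorted({(f.subject, f.action) for f in model if f.obj == obj})


if __name__ == "__main__":
    print(components(FORK_MODEL))
    # ['closed mouth', 'food', 'fork', 'hand', 'human', 'mouth']
    print(acted_on_by(FORK_MODEL, "food"))
    # [('closed mouth', 'stops'), ('fork', 'holds'), ('fork', 'moves')]
```

Asking "what acts on the food?" this way reproduces the three arrows pointing at the food in the diagram.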

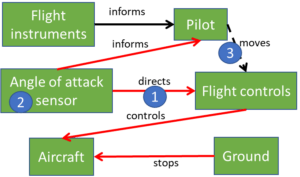

By the way, solid lines are sufficient (the function is performed at just the right amount), dashed lines are insufficient (the function is not performed as well as we would like; in the case of the fork, I indicated that the fork insufficiently holds the food because food sometimes falls off a fork), double lines are excessive (the function is overperformed), and red lines are harmful (the function is harmful to the system or its users). Functionally modeling the failure mode that investigators initially focused on (faulty AOA sensors) in the 737 Max 8 crashes, we could create the following functional model. Notice that the AOA sensor is shown to harmfully direct the flight controls (because the AOA reading was in error), which in turn harmfully control the aircraft (because the flight controls were given bad information).
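The four line styles amount to a quality label on each function, and flagging every non-sufficient link is a natural first pass over a model. The sketch below assumes a hypothetical `Quality` enum and helper functions of our own naming; it encodes only the two harmful 737 Max 8 links named in the text (a real model would contain many more) plus the insufficient fork link.

```python
from dataclasses import dataclass
from enum import Enum


class Quality(Enum):
    """Line styles used in the FM diagrams, as described in the text."""
    SUFFICIENT = "solid"     # performed at just the right amount
    INSUFFICIENT = "dashed"  # performed less well than we would like
    EXCESSIVE = "double"     # over-performed
    HARMFUL = "red"          # harmful to the system or its users


@dataclass(frozen=True)
class Function:
    subject: str
    action: str
    obj: str
    quality: Quality = Quality.SUFFICIENT


# Hypothetical, deliberately partial models: only links the text mentions.
FORK_MODEL = [
    Function("hand", "holds", "fork"),
    Function("fork", "holds", "food", Quality.INSUFFICIENT),
]
MAX8_MODEL = [
    Function("AOA sensor", "directs", "flight controls", Quality.HARMFUL),
    Function("flight controls", "control", "aircraft", Quality.HARMFUL),
]


def problem_functions(model):
    """Return every function that is not SUFFICIENT: where analysis should start."""
    return [f for f in model if f.quality is not Quality.SUFFICIENT]


def harmful_chain(model, start):
    """Follow HARMFUL links from `start`, returning the components reached in order."""
    chain, current, seen = [start], start, {start}
    while True:
        nxt = next((f.obj for f in model
                    if f.subject == current
                    and f.quality is Quality.HARMFUL
                    and f.obj not in seen), None)
        if nxt is None:
            return chain
        chain.append(nxt)
        seen.add(nxt)
        current = nxt
```

For example, `harmful_chain(MAX8_MODEL, "AOA sensor")` returns `["AOA sensor", "flight controls", "aircraft"]`, which is the path of red lines from the faulty sensor to the aircraft.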

Even a quick study of the functional model brings out several curious circumstances. First, why was the AOA sensor allowed to ultimately tell the aircraft what to do? Second, why was a single AOA sensor allowed to ultimately control the aircraft? What happened to the long-followed industry practice of backed-up and cross-checked systems? Third, why were the pilots either unaware of how to overcome or override the AFCS, or unable to do so, to the point of catastrophe?
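The second question can be asked mechanically of a functional model: which components receive a given function from only one source? The sketch below is a structural check only, under our own assumptions: the helper `single_point_inputs` is hypothetical, and the `cross_checked` variant with left and right sensors is an illustrative alternative, not a description of the actual aircraft. Having two inputs does not by itself guarantee a correct cross-check; it only removes the single-source structure.

```python
from collections import defaultdict


def single_point_inputs(links, action="directs"):
    """Return {object: subject} for each object that receives `action` from exactly one subject."""
    sources = defaultdict(set)
    for subject, act, obj in links:
        if act == action:
            sources[obj].add(subject)
    return {obj: next(iter(subs)) for obj, subs in sources.items() if len(subs) == 1}


# As the functional model describes it: one AOA sensor directs the flight controls.
as_described = [
    ("AOA sensor", "directs", "flight controls"),
    ("flight controls", "control", "aircraft"),
]

# Hypothetical cross-checked variant (names are illustrative only):
# two independent sensors both direct the flight controls.
cross_checked = [
    ("AOA sensor (left)", "directs", "flight controls"),
    ("AOA sensor (right)", "directs", "flight controls"),
    ("flight controls", "control", "aircraft"),
]

if __name__ == "__main__":
    print(single_point_inputs(as_described))   # {'flight controls': 'AOA sensor'}
    print(single_point_inputs(cross_checked))  # {}
```

Running the same check with `action="control"` on `as_described` returns `{"aircraft": "flight controls"}`, one way of framing the third question: nothing else in this model, such as a pilot override, acts on the aircraft.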

Cause and Effect Chain (CEC)

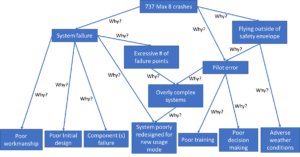

Now let’s switch to the separate, but related, CEC for the 737 Max 8 crash scenarios. While an FM is like a crime scene investigation (it shows what happened), a CEC tells why it happened. The CEC makes a statement (top box) and then seeks to identify the root causes (bottom boxes) that resulted in the outcome reflected in that initial statement. For this analysis, it makes sense for the initial statement of our CEC to be “737 Max 8 crashes.” We then explore the various reasons that might have caused those crashes, keeping in mind that the root causes can be independent, dependent, or both (depending on the specific scenario modeled).
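Because one cause can sit beneath several others (pilot error, for instance, is described later as both a direct cause and a reason for flying outside the safety envelope), a CEC is best treated as a directed acyclic graph rather than a strict tree. The sketch below is an assumption-laden illustration: it uses the top statement and the causes named in this article, but the grouping of root causes under each first-level cause is our own guess, and the actual diagram may group them differently. The helpers `root_causes` and `paths` are hypothetical names.

```python
# Hypothetical grouping of the causes named in the article; the real CEC may differ.
TOP = "737 Max 8 crashes"
CEC = {
    TOP: ["system failure", "flown outside safety envelope", "pilot error"],
    "system failure": [
        "component(s) failure",
        "poor workmanship",
        "poor initial design",
        "system poorly redesigned for new usage model",
    ],
    "flown outside safety envelope": ["pilot error", "adverse weather conditions"],
    "pilot error": ["poor (or little) training", "poor decision making"],
}


def root_causes(cec, node):
    """Return the set of leaf causes (boxes with no further 'why') beneath `node`."""
    children = cec.get(node, [])
    if not children:
        return {node}
    found = set()
    for child in children:
        found |= root_causes(cec, child)
    return found


def paths(cec, node, target, prefix=()):
    """Yield every top-down path of causes from `node` to `target`."""
    prefix = prefix + (node,)
    if node == target:
        yield prefix
        return
    for child in cec.get(node, []):
        yield from paths(cec, child, target, prefix)
```

With this grouping, `root_causes(CEC, TOP)` yields seven root causes, and `list(paths(CEC, TOP, "poor (or little) training"))` yields two paths, one through each role that pilot error plays.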

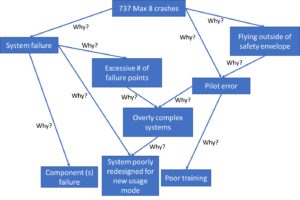

If you are an experienced pilot or aeronautical engineer, you might have additional ideas, or might combine these ideas in different ways, but this CEC demonstrates the general process and does a decent job of describing the mechanisms by which the 737 Max 8s may have crashed. This CEC was created before the first article in this series was completed, and before many details of the tragic events were understood, yet it substantively captures the possible failure modes, and their potential combinations, that led to the crashes of the Max 8s. Note that the CEC is fairly generic: with practically no changes, it could serve as a CEC whose top box instead states, “Tesla auto-pilot crashes.” The only root cause listed that is not substantially generic is “System poorly redesigned for new usage model,” which I added after comparing pictures of a 737 and a 737 Max 8 and observing the fairly obvious design differences (engine size, engine positioning, fuselage length, etc.). Even so, it was the insight gained from creating this initial CEC that allowed me to predict confidently in the first article that a single AOA sensor failure was highly unlikely to have brought down those aircraft.

While reviewing the CEC, notice that there are three first-level causes for the Max 8 crashes: system failure, flying the aircraft outside of its safety envelope, and pilot error. Pilot error could be a direct cause of the crashes or a reason that the plane was flown outside of its safety envelope. In exploring the root causes captured on the CEC (bottom set of boxes), and now having more information about the crashes, we can eliminate some of the potential root causes. For example, we can eliminate poor workmanship, poor initial design, poor decision making, and adverse weather conditions. This leaves us with the now truncated CEC shown below.
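The elimination step can be sketched as pruning: remove the eliminated root causes, then drop any intermediate cause left with nothing beneath it. This is a minimal illustration under stated assumptions: the grouping of root causes in `CEC` is our own guess at the diagram, and `truncate` and `root_causes` are hypothetical helpers. The recursion assumes the CEC has no cycles.

```python
# Hypothetical grouping of the causes named in the article; the real CEC may differ.
TOP = "737 Max 8 crashes"
CEC = {
    TOP: ["system failure", "flown outside safety envelope", "pilot error"],
    "system failure": [
        "component(s) failure",
        "poor workmanship",
        "poor initial design",
        "system poorly redesigned for new usage model",
    ],
    "flown outside safety envelope": ["pilot error", "adverse weather conditions"],
    "pilot error": ["poor (or little) training", "poor decision making"],
}

# Root causes the text eliminates once more information is available.
ELIMINATED = {
    "poor workmanship",
    "poor initial design",
    "poor decision making",
    "adverse weather conditions",
}


def truncate(cec, eliminated):
    """Return a copy of `cec` without eliminated root causes or emptied branches."""
    def survives(node):
        children = cec.get(node, [])
        if not children:  # a leaf, i.e. a root cause
            return node not in eliminated
        # an intermediate cause survives if any cause beneath it survives
        return any(survives(child) for child in children)

    return {
        parent: [child for child in children if survives(child)]
        for parent, children in cec.items()
        if survives(parent)
    }


def root_causes(cec, node):
    """Return the set of leaf causes beneath `node`."""
    children = cec.get(node, [])
    if not children:
        return {node}
    return set().union(*(root_causes(cec, child) for child in children))


TRUNCATED = truncate(CEC, ELIMINATED)
```

Under this grouping, `root_causes(TRUNCATED, TOP)` leaves exactly three root causes: component(s) failure, system poorly redesigned for new usage model, and poor (or little) training. All three first-level causes survive, because pilot error is still reachable through training.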

Root Cause Analysis Conclusion

We know that one of the two AOA sensors failed (component(s) failure), but a sensor failure, especially in an aircraft carrying a redundant sensor, should not have brought down those aircraft. We also know that the Max 8 is actually a modified 737. Therefore, “system poorly redesigned for new usage model” is a definite possibility, and this is also where Boeing likely got into trouble. Below are two aircraft pictures: the top one is the original 737 and the bottom one is the redesigned 737 Max 8. Notice how larger engines were installed to improve the performance of the now longer (more seating) aircraft, and how those engines, being larger in diameter than the original 737 engines, had to be mounted forward and higher than the original 737 design called for. This new engine positioning shifted the center of thrust forward of the plane’s center of gravity, thus causing the aircraft to pitch upward during periods of heavy thrust, such as take-off. To counter this upward pitching (and thus to avoid stalling), Boeing installed a software system to automatically push the aircraft’s nose back down when the angle-of-attack sensor showed that the aircraft was risking a stall. Software used to counter bad physical design is rarely a good idea, especially when it can be triggered by a single sensor failure.

Then, to make a bad situation worse, the details of the Max 8 redesign were not well publicized, presumably to minimize any significant recertification of the original 737 airframe. The 737-certified pilots were told that the Max 8 was “pretty much the same as the old 737” and were thus unaware of the software changes that would drive the aircraft downward in certain scenarios. These pilots therefore did not know how to respond to the nose-down attitude of their aircraft, as they did not even understand why the aircraft were going into dives in the first place. So, the 737 Max 8 pilots had poor (or little) training on the new systems.

As a result, all three root causes appearing in the bottom row of the truncated CEC were partially responsible for the crashes.

In summary, the 737 Max 8s that crashed had:

1. angle-of-attack sensor failure

2. software patches slapped onto poor physical designs

3. a dynamically unstable aircraft

4. catastrophic single point failure potential

5. and software patches that were unknown to the pilots, thus rendering those pilots incapable of countering the ill-fated automated maneuvers.

As expected, these crashes were indeed not caused by a single angle-of-attack sensor alone, but rather by a combination of component failure, poor system redesign (an unstable aircraft), the use of software to “patch” a hardware design problem, and an enigmatic management decision to expedite the approval process. An almost unbelievable set of design and decision failures.

The Max 8 crash FM and CEC were purposely simplified to fit within the scope of this succinct RCFA. Real-life FMs and CECs (not simplified for illustration purposes) are usually larger in scope, more detailed, and more highly coordinated than those shown here. If you would like to learn how to employ Functional Modeling (FM) and Cause and Effect Chains (CECs) for the root cause analysis of system or component failures, give us a shout. Eogogics offers tailored Root Cause Failure Analysis (RCFA) workshops that let you work on problems similar to those encountered on your own job.