SDN/NFV: Enhancing Network Capacity and Functionality

SDN/NFV: What’s Motivating the Development of SDN and NFV?

Many new technologies are being developed to address problems in modern-day networking. These problems stem from changes in usage patterns (especially growth in mobile computing) and difficulties in rapidly provisioning services and bandwidth with older technologies such as SONET/SDH. In general, the movement is toward analyzing and operating networks in terms of control planes and interfaces, with the goal of faster provisioning in a dynamic world, that is, making network bandwidth available when and where it is needed. This problem is becoming more acute with ever-increasing demand for bandwidth and new high-capacity networks (~400 Gbit/s). The major technologies in play are:

- Generalized Multi-protocol Label Switching (GMPLS)

- Automatically Switched Optical Network (ASON)

- Ethernet (new high-speed versions)

- Next Generation Network (NGN)

- Optical Transport Network (OTN)

- Software Defined Network (SDN)

- Network Functions Virtualization (NFV)

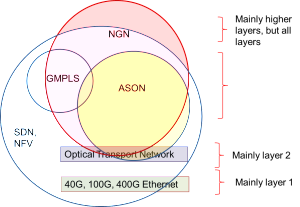

There is much overlap in the solutions that these technologies provide, but all are directed to the goal of better network utilization through faster provisioning. The approximate relationship of these new technologies is shown in Figure 1. In this article we shall concentrate on SDN and NFV.

Figure 1. Approximate Relationship of New Networking Technologies

SDN and NFV are technologies designed to tackle related problems in networking and data center operation:

- Proliferation of specialized equipment complicates network and data center Operation and Maintenance (O&M)

- Difficult to reconfigure wide-area networks or data centers to respond to new or changing needs

- Slow to roll out new services

- Costly to throw out old boxes and buy new

Virtualization: Key to Both SDN and NFV

The boundary between SDN and NFV is rather fuzzy, but both utilize the concept of virtualization, and both employ generic high-performance hardware that can be purchased inexpensively and in large quantities. This hardware is called “white boxes” or “bare metal”.

Virtualization.

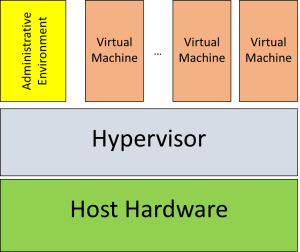

The idea behind virtualization is to use software to make one thing look like something else to the user. For example, one could make an Apple computer look like a PC to the user, with the same commands, displays, and so forth. VMware® is a well-known example of virtualization software, which presents a completely virtualized set of hardware to a guest operating system. That is, an operating system can be installed on a machine running VMware and behave as if it were running natively on hardware including a particular CPU, memory, and video adapter, when in fact all of these are merely presented to the operating system (in effect fooling it) and the actual hardware may be completely different. This approach has a significant advantage: the guest operating system and computing environment, including all the user’s programs and data, can be moved to a totally different set of underlying hardware without skipping a beat. The user would not perceive any difference, except that programs might execute faster or slower. Note that many such “virtual machines” can run on a single underlying set of hardware. Virtualization of this sort is enabled by a bit of magic (which may be software, hardware, or firmware) called a Virtual Machine Monitor (VMM), more commonly known as a “hypervisor”. Figure 2 illustrates the general idea:

Figure 2. Virtualized Computers Using Hypervisor

White Boxes or Bare Metal.

These are generic computing and switching elements that utilize components that can be manufactured inexpensively and in large quantities, such as CPUs and memory. They are designed to operate at extremely high speed and to be configurable under software control. The difference between this hardware and standard devices from Cisco and other manufacturers is that these white boxes come with an operating system but not the usual (proprietary) control and switching software/firmware. They usually rely on open source software to configure and operate them.

In the context of SDN and NFV, the goal is to make the generic hardware—bare metal or white boxes—look like switches, routers, and other network hardware by programming. That is, to virtualize the functions of these devices. Of course, the devices can be easily reprogrammed and reconfigured, which means that the network and resources that a user sees can be rapidly changed. John Chambers of Cisco has said, “Cisco’s principal competition is to come from ‘white box’ solutions — that is, open source software running on generic server and switch hardware, rather than the proprietary solutions from incumbent providers such as Alcatel-Lucent, Ericsson AB, Huawei Technologies Co. Ltd., and Juniper Networks Inc that currently dominate the market.”

Software Defined Networking (SDN)

What is SDN?

The name “Software Defined Networking” tells the story: software is used to define or create a network of resources with some desired set of characteristics. More formally, SDN may be defined as an approach to computer networking that allows network administrators to manage network services through abstraction of lower-level functionality. This is made possible by virtualization and the white boxes. Key elements of SDN are:

- Network resources are controlled by some sort of dashboard or automatic mechanism

- Lower-level devices (switches, wavelengths, etc.) are reconfigured by software rather than hardware techs

- Virtualization is employed through use of software to configure hardware so that it acts like the desired target device

Benefits of SDN

- Faster provisioning

- Lower overhead

- Reduced complexity

- Simplified networking

Note that SDN is an infrastructure technology, used by carriers and ISPs to build better networks, just like DWDM. It enables better, faster provisioning of services. It is not an end-user service—you can’t buy SDN, only services enabled by SDN.

Flavors of SDN. SDN can be implemented in the Wide Area Network (WAN) or the Data Center. We will consider both.

Wide Area Network SDN

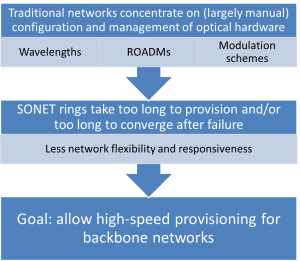

WANs or backbone networks have the goal of delivering connectivity to lower-level entities such as Internet Service Providers (ISPs). In today’s environment, there is often a need to add or change service quickly. But networks based on older technology, such as SONET, are not well suited to this, as shown in Figure 3.

Figure 3. Wide Area Network Problem

SDN in the WAN seeks to address this by giving network operators the ability to manipulate critical network parameters under software control. An example of this ability, the “SDN Cockpit”, is shown in Figure 4, which would enable operators to modify modulation schemes, symbol rates, wavelengths, and so forth. We are, however, not there yet. In addition, network vendors need the ability to reconfigure their networks quickly, and this comes from virtualization of switches, routers, and other network devices; but that shades into NFV, which will be covered later.

Figure 4. Goal of Wide Area SDN: the SDN Cockpit (not yet a reality)

Data Center SDN

The Enterprise data center is where SDN is best known, and most advanced in implementation. When SDN is discussed, it is usually in the context of the data center. The common Enterprise data center problems that SDN is designed to attack are well known, and include:

- Existing network infrastructures can respond to changing requirements for management of traffic flows, but the process is very time-consuming

- Protocols designed for unreliable WANs are inefficient for use inside highly reliable data centers

- There is a need to handle rapidly changing resource, QoS, and security requirements of mobile devices

- Server virtualization does not work well with traditional architectures, e.g., with VLANs

Planes and Their Functions

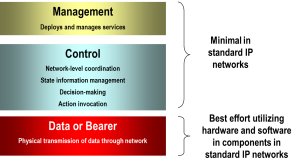

The idea behind SDN in the Enterprise data center is to leverage virtualization and white boxes, as well as utilizing the distinction between network planes. Network planes are a way of logically separating network functions in order to facilitate network control and configuration. Refer to Figure 5, which shows the three planes commonly used to discuss network functionality.

Figure 5. Network Planes

The function of the planes can be summarized as follows:

- The Control plane is an abstraction of all programs that control a network, including routing, monitoring, route calculation, fault handling, and provisioning.

- The Data or Transport plane is the hardware that actually does the work, including switching, framing, and low-level error control.

- The Management plane decides overall network policies, including pricing, services offered, and performance specifications.

SDN in the Data Center, North/South-Bound Interfaces

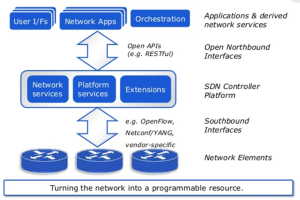

The basic idea of SDN in the data center is quite simple: Each application “sees” a network configuration based on how the network hardware looks to it. This is a “virtual network” in the sense that the actual hardware constituting the network is not visible to the application, only the pathways open to it, including speeds, reachable locations, and resources such as storage. The Control Plane knows about each application’s needs. The Control Plane sends signals to the Data Plane to reconfigure switches or other hardware to meet (or better meet) all application needs. This logically (but not physically) reconfigures the network, so that the new network looks like a different physical network to applications, including networked resources such as storage. That is, no cables are changed, and no new hardware is installed. Only packet routing, storage, etc. are changed under software control. So with this kind of virtualization, the application “sees” a different network, and the application’s data flows through the network now get expedited, or it has access to more storage, for example. Refer to Figure 6, which shows how the SDN elements sit between applications and underlying network hardware.

Figure 6. SDN Network Configuration. (Source: ADVA Optical Networks)

The interface between SDN controllers and applications is called the “Northbound Interface”, and that between the SDN controller and the network hardware is called the “Southbound Interface”.
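The two interfaces can be pictured with a minimal sketch. It is purely illustrative: the class and method names (`Controller`, `Switch`, `request_path`, `install_rule`) are assumptions made for this example and do not come from any real SDN controller API. The point is only the direction of each call: applications talk "down" to the controller in terms of needs, and the controller talks "down" to the hardware in terms of rules.

```python
from dataclasses import dataclass, field


@dataclass
class Switch:
    """Data-plane device; it only stores what the controller pushes down."""
    name: str
    rules: list = field(default_factory=list)

    def install_rule(self, rule: dict) -> None:
        # Southbound side: the controller calls this to program the device.
        self.rules.append(rule)


class Controller:
    """Sits between applications (north) and network hardware (south)."""

    def __init__(self, switches):
        self.switches = {s.name: s for s in switches}

    def request_path(self, app: str, path: list, min_mbps: int) -> None:
        # Northbound side: an application states what it needs,
        # not how the hardware should be configured.
        for hop in path:
            self.switches[hop].install_rule({"app": app, "min_mbps": min_mbps})


s1, s2 = Switch("s1"), Switch("s2")
controller = Controller([s1, s2])
controller.request_path("backup", ["s1", "s2"], min_mbps=500)
print(s1.rules)
```

Note that the application never touches a `Switch` object directly; no cabling or hardware changes, only the rules held by the devices.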

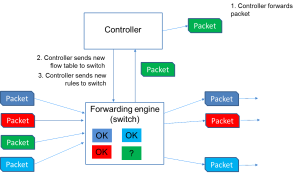

How SDN Operates on Flows

SDN operates on “flows”. A flow describes a set of packets transferred from one network endpoint to another; the endpoints could be TCP/UDP port pairs, VLAN endpoints, or L3 tunnel endpoints. Most networks and data centers have a set of flows that accounts for most of their workload, so using flows to control the network or data center configuration makes sense. In the case of SDN, a flow table is placed on each packet-handling device (SDN-controlled device). When a packet comes in, a flow table lookup is done to determine the action to take. This is analogous to label switching in MPLS, and is done at line speed. In this way data is switched through the network. Separately, an SDN controller calculates (and optimizes) flows and flow tables, based on knowledge of network capability and application needs, though this is not done at line speed.
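A flow table lookup can be sketched in a few lines. This is a simplified illustration, not the matching logic of any particular switch: the three match fields, the wildcard convention (`None` matches anything), the first-match-wins ordering, and the action strings are all assumptions chosen for the example. Real devices match on many more fields and do the lookup in hardware.

```python
from typing import NamedTuple, Optional


class Packet(NamedTuple):
    src_ip: str
    dst_ip: str
    dst_port: int


class FlowEntry(NamedTuple):
    # A field set to None acts as a wildcard and matches any value.
    src_ip: Optional[str]
    dst_ip: Optional[str]
    dst_port: Optional[int]
    action: str

    def matches(self, pkt: Packet) -> bool:
        # Compare the three match fields against the packet, field by field.
        return all(
            want is None or want == got
            for want, got in zip(self[:3], pkt)
        )


def lookup(flow_table: list, pkt: Packet) -> str:
    """Return the action of the first matching entry (table order = priority)."""
    for entry in flow_table:
        if entry.matches(pkt):
            return entry.action
    return "send_to_controller"  # no flow assigned to this packet


flow_table = [
    FlowEntry(None, "10.0.0.5", 443, "output:port2"),
    FlowEntry("10.0.0.9", None, None, "drop"),
]

print(lookup(flow_table, Packet("10.0.0.1", "10.0.0.5", 443)))  # output:port2
print(lookup(flow_table, Packet("10.0.0.9", "10.0.0.7", 80)))   # drop
print(lookup(flow_table, Packet("10.0.0.1", "10.0.0.7", 80)))   # send_to_controller
```

The device itself makes no routing decision here; it only looks up what the controller has already placed in the table.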

Figure 7. Operation of SDN

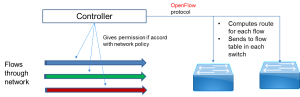

OpenFlow and What It Does

OpenFlow is an open protocol, maintained by the Open Networking Foundation (ONF), that is often used as the control protocol between the SDN controller and the network devices in SDN installations. Refer to Figure 7.

OpenFlow enables the functionality that SDN is intended to implement. Specifically, OpenFlow:

- Provides access to the forwarding plane of a router or switch over the network

- Allows the path of data packets within the network to be determined by software running on at least two routers

- Is designed for managing network traffic between switches and routers of different models and vendors

- Separates the programming of switches and routers from their hardware, so that no hardware configuration needs to be done and all control can be flexibly attained through software

How OpenFlow Works

OpenFlow works by modifying a switch’s packet forwarding tables: adding, modifying, and removing packet matching rules and actions. Routing decisions are made periodically or ad hoc by the SDN controller. They are translated into rules and actions with adjustable lifespans, and deployed to the switch’s flow tables. Actual forwarding of matched packets is handled at wire speed by the switch for the duration of the rules. Unmatched packets (packets for which no flow is assigned) are forwarded to the SDN controller, which must determine what to do with them. Figure 8 shows the action of the controller and switch.
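The division of labor between switch and controller can be sketched as follows. This is a toy model under stated assumptions, not the OpenFlow wire protocol: the classes, the `packet_in` method name, matching on destination address only, and the single `lifespan` value are simplifications invented for the example (real OpenFlow flow entries carry richer match fields plus idle and hard timeouts).

```python
class Switch:
    def __init__(self, controller):
        self.controller = controller
        self.flow_table = {}  # dst address -> (out_port, expires_at)

    def receive(self, dst: str, now: float) -> str:
        rule = self.flow_table.get(dst)
        if rule is not None and now < rule[1]:
            return f"forwarded to port {rule[0]}"  # fast path, no controller
        # Table miss or expired rule: hand the packet to the controller.
        self.flow_table.pop(dst, None)
        return self.controller.packet_in(self, dst, now)


class Controller:
    def __init__(self, routes: dict, lifespan: float):
        self.routes = routes      # dst address -> out_port (the routing decision)
        self.lifespan = lifespan  # seconds a deployed rule stays valid

    def packet_in(self, switch: Switch, dst: str, now: float) -> str:
        port = self.routes.get(dst)
        if port is None:
            return "dropped by controller"
        # Translate the decision into a rule and deploy it to the switch.
        switch.flow_table[dst] = (port, now + self.lifespan)
        return f"rule installed, forwarded to port {port}"


controller = Controller(routes={"10.0.0.5": 2}, lifespan=30.0)
switch = Switch(controller)

print(switch.receive("10.0.0.5", now=0.0))   # miss: controller installs a rule
print(switch.receive("10.0.0.5", now=10.0))  # hit: handled by the switch alone
print(switch.receive("10.0.0.5", now=45.0))  # rule expired: back to the controller
print(switch.receive("10.0.0.8", now=46.0))  # no route known
```

Only the first packet of a flow (and the first after a rule expires) pays the cost of a round trip to the controller; everything in between stays on the switch.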

Figure 8. SDN Controller and Switch

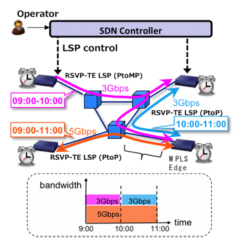

Future of SDN

One scenario is that SDN will expand from data centers to the WAN. In this scenario, RSVP-TE LSPs are dynamically created and deleted at specified times by the SDN controller. These LSPs have their bandwidth and source/destination specified. The SDN controller monitors traffic and utilization of the network in real time in order to optimize network performance. The SDN controller then controls the MPLS edge routers so that RSVP-TE is signaled accurately at the necessary times. See Figure 9 for an illustration of this scenario.
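The time-driven part of this scenario can be sketched as a small reconciliation loop. Everything here is hypothetical: the `ScheduledLsp` record, the hour-based schedule, the router names, and the action strings stand in for whatever a real controller would send to the edge routers. No actual RSVP-TE signaling is performed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScheduledLsp:
    name: str
    source: str
    destination: str
    bandwidth_mbps: int
    start_hour: int  # inclusive
    end_hour: int    # exclusive


def reconcile(schedule, active: set, hour: int) -> list:
    """Return the signaling actions needed at `hour`, updating `active` in place."""
    actions = []
    for lsp in schedule:
        wanted = lsp.start_hour <= hour < lsp.end_hour
        if wanted and lsp.name not in active:
            active.add(lsp.name)
            actions.append(f"signal {lsp.name} {lsp.source}->{lsp.destination} "
                           f"{lsp.bandwidth_mbps} Mbit/s")
        elif not wanted and lsp.name in active:
            active.remove(lsp.name)
            actions.append(f"tear down {lsp.name}")
    return actions


schedule = [ScheduledLsp("nightly-backup", "PE1", "PE4", 2000, 1, 4)]
active = set()
for hour in range(0, 6):
    for action in reconcile(schedule, active, hour):
        print(hour, action)
```

With this schedule the loop prints one "signal" action at hour 1 and one "tear down" action at hour 4; in the other hours nothing needs to change.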

Figure 9. Scenario for Future Expansion of SDN into WAN

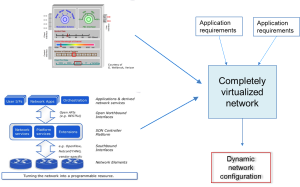

Another scenario involves a merging of local (data center) networks and WANs, as the resources required by applications become more scattered. The growth of cloud computing (actually much more than computing) also feeds into this model. In this scenario, all network resources are virtualized and controllable, as illustrated in Figure 10.

Figure 10. Hypothetical Completely Virtualized SDN Environment

Network Functions Virtualization (NFV)

What Is NFV?

NFV concentrates more on network functionality. As with SDN, its name is appropriate: Network Functions Virtualization uses virtualization of network components to create functionality. To put it simply, white boxes or bare metal are used to carry out key network functions such as switching, routing, and firewalls under software control. These virtualized devices can be chained together to create communications links and services. In this way, by virtualization of this functionality, a network can be reconfigured quickly and new services provisioned rapidly.

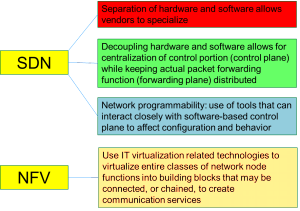

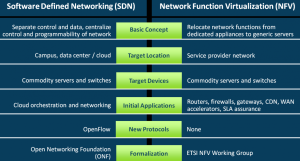

How NFV Is Related to SDN

NFV’s relationship with SDN is shown in Figure 11.

Figure 11. Relationship of SDN and NFV

What NFV Does

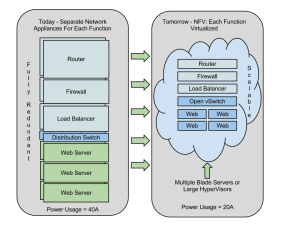

As noted in the figure, NFV is intended to virtualize entire classes of network node functions into building blocks that may be connected, or chained, to create communication services. Due to advances in the speed and capability of computer hardware, which we see in “white boxes”, any service now delivered on proprietary, application-specific hardware should be doable on a virtual machine. Essentially, routers, firewalls, load balancers, and other network devices can all run on commodity hardware in virtualized fashion. So the NFV principles are:

- Consolidate many network equipment types onto industry-standard, high-volume servers, switches, and storage

- Implement network functions in software that can run on a range of industry-standard server hardware

- Move or instantiate these functions in various locations in the network as required, without the need to install new equipment

- Base the design on building blocks of virtualized network functions (VNFs)

- Combine VNFs to create full-scale networking communication services

- Let each VNF handle a specific network function, running in one or more virtual machines on top of the hardware networking infrastructure

NFV Advantages

- Virtualization: Use network device without worrying about where it is or how it is constructed

- Orchestration: Manage thousands of devices efficiently

- Programmable: Change behavior on the fly

- Dynamic scaling: Change size, quantity

- Performance: Optimize network device utilization

- Openness: Full choice of modular plug-ins

See Figure 12 for a view of NFV as virtualized network appliances.

Figure 12. Virtualization in NFV (Source: Steve Noble)

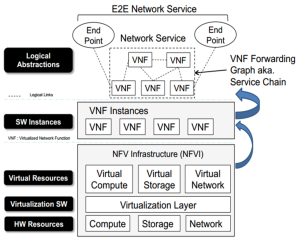

Service Function Chaining.

Service function chaining is how virtualized network services are assembled into a desired network service. First, define an ordered list of the network services (e.g., firewalls, NAT, QoS) needed by a customer or group of customers. These services are called “Virtual Network Functions” (VNFs). They are “stitched” together in a network to create a service chain, i.e., a path through which packets with a certain tag flow. The VNFs themselves are created through virtualization, with a virtualization software layer on top of underlying physical hardware, and through use of white boxes. This is illustrated in Figure 13.
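A service chain can be sketched as an ordered list of functions selected by a packet's tag. This is an illustration only: the three toy VNFs, the tag names `gold` and `basic`, the blocked port, and the dictionary packet format are assumptions made up for the example, and the public address is taken from a documentation-only range.

```python
def firewall(pkt: dict):
    # Drop anything aimed at a blocked port; otherwise pass it on unchanged.
    return None if pkt["dst_port"] in {23} else pkt


def nat(pkt: dict):
    # Rewrite a private source address to a (made-up) public one.
    return {**pkt, "src": "203.0.113.10"}


def qos(pkt: dict):
    # Mark the packet with a priority class.
    return {**pkt, "priority": "high"}


# Tag -> ordered list of VNFs; the order is the service chain.
SERVICE_CHAINS = {
    "gold": [firewall, nat, qos],
    "basic": [firewall, nat],
}


def traverse(pkt: dict):
    """Send a packet through the chain selected by its tag."""
    for vnf in SERVICE_CHAINS[pkt["tag"]]:
        pkt = vnf(pkt)
        if pkt is None:  # a VNF dropped the packet
            return None
    return pkt


print(traverse({"tag": "gold", "src": "192.168.1.7", "dst_port": 443}))
print(traverse({"tag": "basic", "src": "192.168.1.7", "dst_port": 23}))
```

Offering a new service to a customer then amounts to editing the list for that customer's tag, rather than installing another physical box in the path.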

Figure 13. Chaining in NFV

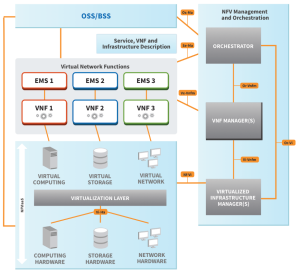

NFV Reference Architecture

The European Telecommunications Standards Institute (ETSI) has done much of the development work on NFV. Their “reference architecture” illustrates the basic components of an NFV installation. It shows the Operational Support System/Business Support System (OSS/BSS), which utilizes the virtualized functionality, the virtual network functions, and the virtualization layer that turns physical hardware into virtual functions such as computing, storage, and network connectivity. The Management and Orchestration component, known as “MANO”, is what controls the virtualization and thus the functionality seen by users. The reference architecture is shown in Figure 14. Note that practical implementations do not always separate the functions shown in the MANO.

Figure 14. NFV Reference Architecture from ETSI

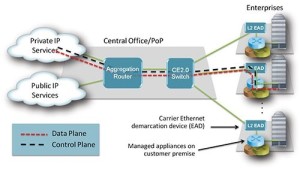

NFV Example

Consider the case of an Enterprise service consisting of managed router and managed VPN, as shown in Figure 15. If additional services are needed, the Service Provider dispatches a technician on-site to install, configure, and test the new device(s) needed, e.g., a new firewall.

Figure 15. Old Way of Changing Service

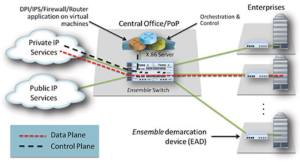

With NFV, matters are much simpler and faster. The IT manager logs onto a self-service portal and orders the additional managed firewall service. The NFV software takes it from there: the portal sends a service order to the NFV system, which installs the firewall virtual function on an available virtual machine in the central office or PoP. It then reconfigures the metro aggregation switch to steer the appropriate packet flows to the virtual function, and updates the billing record in the back-office billing system, as shown in Figure 16.
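The three steps triggered by the service order can be sketched as one function. This is a hypothetical model, not a real orchestration API: `NfvSystem`, `handle_order`, the VM names, and the in-memory lists standing in for the aggregation switch and the billing system are all invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class NfvSystem:
    free_vms: list                                     # VMs available in the central office or PoP
    vnf_placement: dict = field(default_factory=dict)  # (customer, service) -> VM
    steering: list = field(default_factory=list)       # rules on the aggregation switch
    billing: list = field(default_factory=list)        # back-office billing records

    def handle_order(self, customer: str, service: str) -> str:
        if not self.free_vms:
            raise RuntimeError("no virtual machine available")
        # 1. Install the virtual function on an available virtual machine.
        vm = self.free_vms.pop(0)
        self.vnf_placement[(customer, service)] = vm
        # 2. Steer the customer's packet flows to the virtual function.
        self.steering.append((customer, vm))
        # 3. Update the billing record.
        self.billing.append((customer, service))
        return vm


nfv = NfvSystem(free_vms=["vm-17", "vm-18"])
print(nfv.handle_order("acme", "managed-firewall"))  # the portal would make this call
print(nfv.steering, nfv.billing)
```

No step in this flow involves a technician or a truck roll; the order is fulfilled entirely by changing software state.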

Figure 16. Example with NFV

More on Relationship between SDN and NFV

SDN comes out of large-scale IP infrastructures. It is intended to establish and exercise central control over packet forwarding, simplify traffic management, and achieve operational efficiencies, primarily in a large (Enterprise-scale) data center. It utilizes an open networking environment and elements such as switches, servers and storage configured and managed centrally. These elements run on standard hardware components (“white boxes”). There is separation of network control logic from physical routers and switches that forward traffic.

NFV originated from SDN. It was developed for service providers interested in facilitating deployment of new network services. NFV virtualizes networking devices and appliances. The goal is to avoid proliferation of physical devices to fill specialized roles such as routing, switching, content filtering, spam filtering, and load balancing. Virtual implementations provide these important network functions instead of specialized physical devices. The aim is to achieve WAN acceleration and optimization, reduce development/deployment costs and risks, and handle unified threat management.

The current relationship between SDN and NFV is summarized in Figure 17. In the future, as discussed earlier, the functionality of these two will likely merge. In fact that trend is well underway with large-scale providers such as Google and Amazon.

Figure 17. Current Relationship of SDN and NFV (Source: Overture Networks)

Summary

SDN and NFV have been developed to help meet the challenge of increasing demand for services and user expectation of rapid provisioning and universal availability. Both rely on virtualization, the ability to make a piece of equipment look like another via the magic of software. What makes this cost-effective is the availability of inexpensive but powerful computing units that can easily be programmed to take on many different functions such as switches, firewalls, and routers.

SDN was originally developed to address the problems of large data centers, where virtualization of hardware and operating system is important (think cloud computing). It will likely spread to other areas, including the WAN, where virtualization and the ability to dial up bandwidth and related characteristics would be extremely valuable as well. NFV is an outgrowth of SDN in many respects, concentrating on backbone networks, where the need to rapidly reconfigure resources is key.

Both SDN and NFV are conceptualized in terms of planes: the control plane (the hardware/software that configures and controls a network), the data plane (which does the actual work), and the management plane (which sets network policies such as services offered, performance specs, and pricing). The control plane looks to optimize network flows, and creates new ones when needed. It can also define new services using existing resources or by reprogramming hardware.

SDN and NFV are here to stay and grow, and may ultimately merge into a unified means of creating, controlling, and allocating network resources from the LAN to the WAN.

Editor’s Note: Eogogics offers courses on SDN and NFV as well as related areas of Cloud Computing and IP networks. We also offer market intelligence research publications on SDN, NFV, and related topics.
